AIR-ML Lab @ CISPA

Led by Dr. Xiao Zhang, the AIR-ML lab conducts research on Adversarial, Interpretable, and Robust Machine Learning (AIR-ML), with the ultimate goal of building trustworthy AI systems that are both resilient to attacks and transparent in their decision-making. Specifically, we analyze the dynamics of ML models, develop principled tools to audit and address their vulnerabilities, and build trustworthy AI systems for real-world applications. Our lab is currently affiliated with the CISPA Helmholtz Center for Information Security and is located in Saarbrücken, Germany.

Latest News

[Oct 2025] Our paper "DivTrackee versus DynTracker: Promoting Diversity in Anti-Facial Recognition against Dynamic FR Strategy" has received the Distinguished Paper Award 🏆 at CCS 2025!

[Sep 2025] One paper on LLM jailbreaks accepted at NeurIPS 2025! Congratulations to Advik!

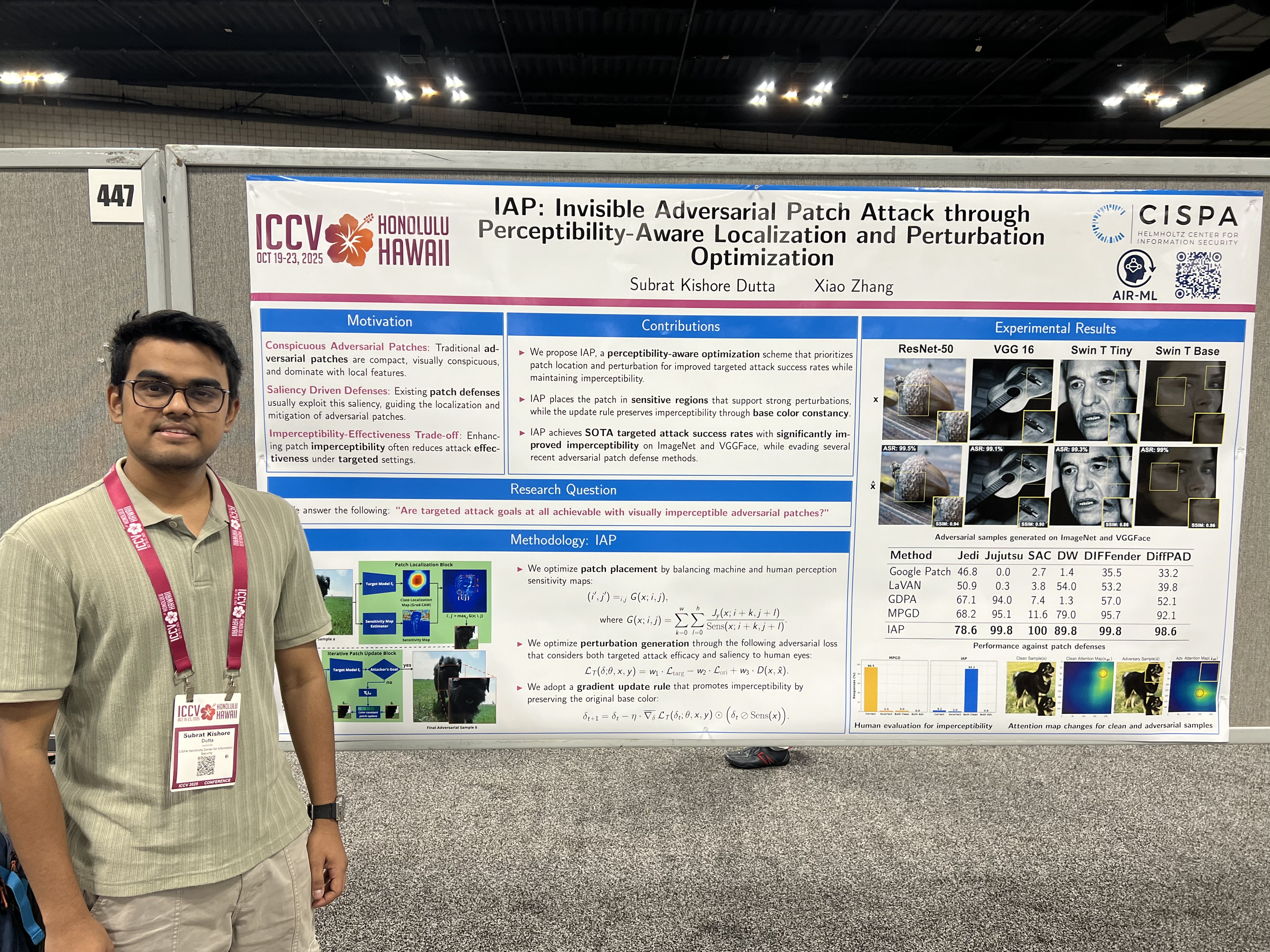

[Jun 2025] One paper on adversarial patches accepted at ICCV 2025! Congratulations to Subrat!

[May 2025] One paper on cost-sensitive robustness and randomized smoothing accepted at ICML 2025!

[May 2025] Subrat has joined the AIR-ML lab as a PhD student 🎓. Welcome on board!

[Apr 2025] Congratulations to Yuelin for successfully passing her Q.E. 🎉!

[Mar 2025] One paper on anti-facial recognition accepted at CCS 2025!

[Nov 2024] One paper on privacy auditing and membership inference accepted to TMLR!

[Oct 2024] Congratulations to Santanu for successfully passing his Q.E. 🎉!

[Oct 2024] One paper on adversarial defenses on diffusion models accepted at WACV 2025!